Researchers Upend Ai Status Quo by Eliminating Matrix Multiplication in Llms Ars Technica

Researchers upend AI status quo by eliminating matrix multiplication in LLMs | Ars Technica #

Excerpt #

Running AI models without floating point matrix math could mean far less power consumption.

The researchers’ approach involves two main innovations: first, they created a custom LLM and constrained it to use only ternary values (-1, 0, 1) instead of traditional floating-point numbers, which allows for simpler computations. Second, the researchers redesigned the computationally expensive self-attention mechanism in traditional language models with a simpler, more efficient unit (that they called a MatMul-free Linear Gated Recurrent Unit—or MLGRU) that processes words sequentially using basic arithmetic operations instead of matrix multiplications.

Third, they adapted a Gated Linear Unit (GLU)—a gating mechanism to control information flow in neural networks—to use ternary weights for channel mixing. Channel mixing refers to the process of combining and transforming different aspects or features of the data the AI is working with, similar to how a DJ might mix different audio channels to create a cohesive song.

These changes, combined with a custom hardware implementation to accelerate ternary operations through the aforementioned FPGA chip, allowed the researchers to achieve what they claim is performance comparable to state-of-the-art models while reducing energy use. Although they ran comparisons on GPUs to benchmark against traditional models, the MatMul-free models are designed to operate efficiently on hardware that is optimized for simpler arithmetic operations, such as FPGAs. This suggests that these models could potentially be run on various types of hardware, including those with more limited computational resources than GPUs.

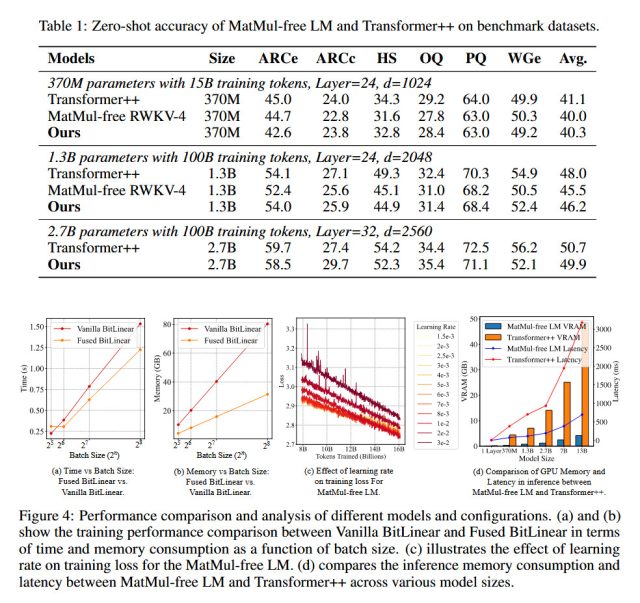

Enlarge / This chart taken from the paper shows relative performance of the MatMul-free LLM compared to a conventional (Transformer++) LLM on benchmarks.

To evaluate their approach, the researchers compared their MatMul-free LM against a reproduced Llama-2-style model (which they call “Transformer++”) across three model sizes: 370M, 1.3B, and 2.7B parameters. All models were pre-trained on the SlimPajama dataset, with the larger models trained on 100 billion tokens each. Researchers claim the MatMul-free LM achieved competitive performance against the Llama 2 baseline on several benchmark tasks, including answering questions, commonsense reasoning, and physical understanding.

In addition to power reductions, the researchers’ MatMul-free LM significantly reduced memory usage. Their optimized GPU implementation decreased memory consumption by up to 61 percent during training compared to an unoptimized baseline.

To be clear, a 2.7B parameter Llama-2 model is a long way from the current best LLMs on the market, such as GPT-4, which is estimated to have over 1 trillion parameters in aggregate. GPT-3 shipped with 175 billion parameters in 2020. Parameter count generally means more complexity (and, roughly, capability) baked into the model, but at the same time, researchers have been finding ways to achieve higher-level LLM performance with fewer parameters.

So, we’re not talking ChatGPT-level processing capability here yet, but the UC Santa Cruz technique does not necessarily preclude that level of performance, given more resources.

Extrapolating into the future #

The researchers say that scaling laws observed in their experiments suggest that the MatMul-free LM may also outperform traditional LLMs at very large scales. The researchers project that their approach could theoretically intersect with and surpass the performance of standard LLMs at scales around 10²³ FLOPS, which is roughly equivalent to the training compute required for models like Meta’s Llama-3 8B or Llama-2 70B.

However, the authors note that their work has limitations. The MatMul-free LM has not been tested on extremely large-scale models (e.g., 100 billion-plus parameters) due to computational constraints. They call for institutions with larger resources to invest in scaling up and further developing this lightweight approach to language modeling.

The article was updated on June 26, 2024 at 9:20 AM to remove an inaccurate power estimate related to running a LLM locally on a RTX 3060 created by the author.