AI Won't Eat Your Job, but It Will Eat Your Salary

AI won’t eat your job, but it will eat your salary #

Excerpt #

On rebundling jobs and skill premiums

The AI augmentation fallacy goes something like this:

“AI won’t take your job but someone using AI might.”

Instead, I posit that for the vast majority of jobs, the effects of AI will play out something like this:

“AI won’t take your job, but it will take your ability to charge a premium for it.”

In other words:

“AI won’t eat your job but it will take a nice chunky bite out of your salary.”

The question isn’t really whether machines will replace humans.

That question is one of the biggest distractions of our time.

The question that really matters is whether humans will still command a skill premium once they are augmented by machines.

And if so, which skills will preserve that premium and which ones will not.

The debate on the effect of AI on jobs is repeatedly distracted by the question of replacement through automation, when the factor that really drives our engagement with our careers is the ability to own skills which grant us a skill premium.

And AI is perfectly poised to attack not your job, but the ability to charge a skill premium for it.

In particular, we look at three key ideas through this post:

Four ways that augmentation rebundles jobs

Three ways that humans attract a skill premium

Three ways that AI erodes this skill premium

Unbundling and rebundling of work #

Every job is a bundle of tasks.

Some of these tasks require specialization, some don’t, but they still remain part of the bundle as the cost of unbundling and delegating those tasks may be high.

Every new wave of technologies (including the ongoing rise of Gen AI) attacks this bundle.

Substitutes and complements #

New technology may substitute a specific task - for instance, intelligent scheduling tools may fully substitute a task previously performed by a human.

New technology may also complement a specific task - for instance, improvements in AI provide diagnostic support to doctors and radiologists, enhancing their ability to perform the task.

First-order thinking suggests that technology-as-substitute (automation) is bad, and that technology-as-complement (augmentation) is good.

However, to understand whether technology has a net-positive or a net-negative impact on a job, it’s important to think through the effects of augmentation on the job bundle described above.

Depending on the effects on the bundle, the skill premium (the ratio of the wage of skilled workers to unskilled workers) for that job category may increase, decrease, or remain the same.
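As a rough illustration of the definition above, the skill premium can be computed as a simple wage ratio. The function name and the wage figures below are hypothetical, chosen only to show the arithmetic.

```python
def skill_premium(skilled_wage: float, unskilled_wage: float) -> float:
    """Ratio of the skilled wage to the unskilled wage (1.0 means no premium)."""
    if unskilled_wage <= 0:
        raise ValueError("unskilled_wage must be positive")
    return skilled_wage / unskilled_wage


# Hypothetical hourly wages, for illustration only.
before = skill_premium(skilled_wage=60.0, unskilled_wage=20.0)
after = skill_premium(skilled_wage=40.0, unskilled_wage=20.0)

print(f"premium before: {before:.2f}, after: {after:.2f}")
```

In this made-up case the skilled worker has a job in both states. Only the ratio has changed, falling from 3.0 to 2.0.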

The skill premium and the rebundling of work #

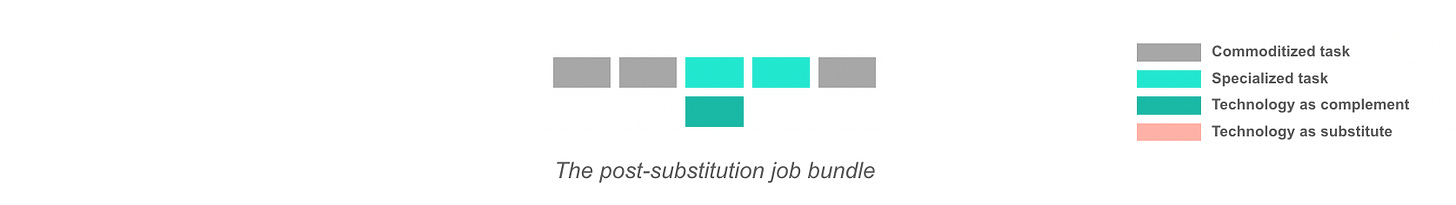

There are four possible ways in which the job bundle gets rebundled.

Scenario 1: Status quo #

First, there is no shift in value from the job bundle.

AI augmentation helps the worker but there is no substantial improvement in their ability to capture value either through productivity improvements or through levelling up to include higher value tasks in the job bundle.

Outcome: There are no effects on the skill premium, i.e. technology exerts neither upward nor downward pressure on your salary as a skilled worker.

I believe the effects of Generative AI will skew less in this direction and more in the other three directions below.

Scenario 2: Value migration #

In this scenario, there is a shift in value towards the job bundle.

AI augmentation migrates value towards a specific job bundle, largely because the worker benefits from productivity improvements (more of the same) or because they level up to include higher value tasks in the job bundle (more of different).

Outcome: Your ability to charge a skill premium increases i.e. augmented by technology, you can now charge a higher salary for the same skills.

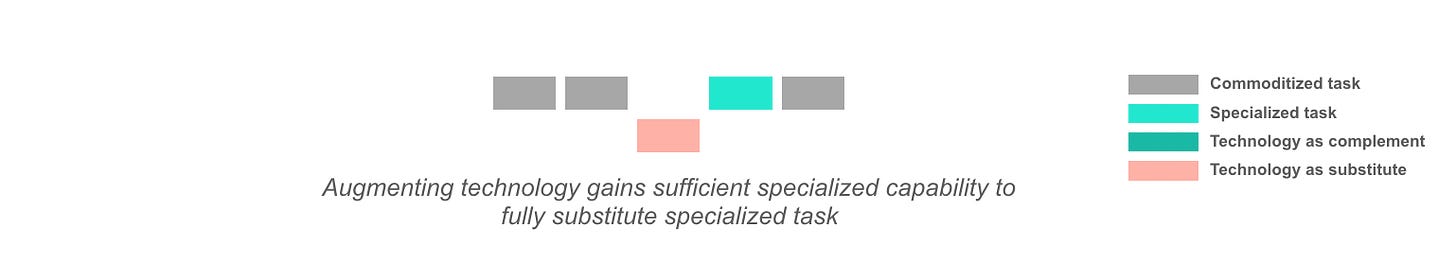

Scenario 3: Commoditization #

Commoditization - one of the lesser understood effects of augmentation - plays out when one or both of two factors come into play:

Augmentation absorbs value from the specialized task and commoditizes the task over time

Augmentation lowers the (skill) barrier to entry to the specialized task, increasing the potential base of workers who can now perform the task. Without a significant shift in value or growth in demand, this increased competition reduces the worker’s ability to capture value for the task.

Outcome: Your ability to charge a skill premium decreases i.e. as others in your job category increasingly use technology, your ability to sustain your salary decreases.

This scenario is very likely with AI, in general, and Gen AI, in particular, owing to its ability to commodify knowledge and commoditize knowledge-oriented tasks.

Scenario 4: Eventual Substitution #

Finally, the end game of augmentation could very well be automation (substitution).

This may play out because of data-driven learning effects.

As workers increasingly use augmenting tech, they generate data needed for model training and fine-tuning, which constantly improves model accuracy and specialization. Eventually, the model becomes specialized enough to fully substitute the task.

Outcome: Your job itself gets substituted.

Three sources of skill premium #

Let’s get back to the central argument of this post.

AI won’t eat your job but it will eat your ability to charge a premium for it.

Below, we explore three sources of skill premium:

Skilling advantages: The skill premium that a higher skilled worker commands over a lower skilled worker.

Learning advantages: The ability to defend and grow the skill premium through continuous learning.

Managerial advantages: The additional skill premium associated with combining skilling and learning advantages with planning and resource allocation capabilities.

Let’s look at three factors that drive erosion of the skill premium.

#1 - Skill premium on specialized tasks #

Workers who perform specialized tasks and command a skill premium over unskilled workers will lose that skill premium if AI enables low-skilled workers to perform at par with the high-skilled ones.

As I explain in Slow-burn AI: When augmentation, not automation, is the real threat:

When technology augments skilled work and enables historically low-skilled workers to perform at par with historically high-skilled workers, such augmentation makes workers more substitutable and eventually commoditizes the job.

AI augmentation makes high-skilled knowledge workers relatively more substitutable by lowering the skill barrier to achieve the same performance.

Augmentation improves productivity and output but does so to a greater extent for those with lower skills than for those with higher skills. When this plays out, the worker becomes more substitutable as the skill becomes more commoditized.
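One way to picture this uneven uplift is a toy model in which a tool closes a fixed share of the gap between each worker's unaided output and a common ceiling. The `augmented` function, the `ceiling` and `lift` parameters, and the scores are all assumptions made up for this sketch, not measurements.

```python
def augmented(base: float, ceiling: float = 100.0, lift: float = 0.5) -> float:
    """Toy assumption: the tool closes a fixed share (`lift`) of the gap
    between a worker's unaided output and a common ceiling."""
    return base + lift * (ceiling - base)


# Hypothetical unaided output scores for a low-skilled and a high-skilled worker.
low, high = 40.0, 90.0

gap_before = high / low
gap_after = augmented(high) / augmented(low)

print(f"low: {low} -> {augmented(low)}, high: {high} -> {augmented(high)}")
print(f"performance ratio: {gap_before:.2f} -> {gap_after:.2f}")
```

Both workers improve under this assumption, but the low-skilled worker gains 30 points and the high-skilled worker only 5, so the performance ratio between them shrinks.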

#2 - Skill premium on learning advantages #

As explained above, skill commoditization is the first effect of AI, but that in itself isn’t unique to AI. Previous technology cycles also eroded the skill premium on specialized tasks.

AI is unique in its impact on learning advantages.

Consider the axe-wielding example I use in Slow-burn AI:

Up until the start of the twentieth century, axe-wielding was a high-skilled job. In order to be any good at logging, you needed to perfect the right angle of the axe swing as well as the grip on the axe, through years of practice, that developed both muscle and muscle memory.

The invention of the chainsaw changed all of that. Low-skilled loggers, who lacked the knowledge or the muscle memory to perform well, could now perform at a much higher level of effectiveness.

The chainsaw was a technology that commoditized axe-wielding as a skill. But axe-wielding, arguably, has diminishing returns to learning once you’ve reached a certain level of skill. There is only so much further improvement achievable through learning beyond that point.

A lot of knowledge work is quite different. Knowledge work requires you to constantly keep learning.

Take healthcare as an example. The average physician goes through 5-8 years of medical school and needs to continue honing her craft over the course of a long career. Law is another field where learning advantages command a skill premium.

The skill premium here is attached not merely to skill specialization but to the ability to grow and defend that premium through continuous learning.

AI attacks this skill premium because of its ability to learn at scale:

AI compounds commoditization by continuous absorption of skills.

The more successful the AI is at augmentation, the more often it is used, the more data it captures to get trained, and the more effective it gets at future augmentation.

Take healthcare as an example:

AI commodifies and absorbs proprietary knowledge and learning advantages that workers (e.g. doctors) may have developed in a specific industry.

As AI augmentation plays out, doctors are constantly training the model, driving more of their skill into it.

In China, PingAn Good Doctor started out as a tele-health platform with AI-augmented doctors treating patients over the platform. Several years of model training later, the AI model now beats human doctors at many tasks.

The AI has moved on from augmentation to substitution.

With large language models (LLMs), the scope of knowledge that can be commodified and absorbed into a model increases further, increasing the potential for similar commoditization to play out across a larger range of jobs.

Much like Google Maps ‘absorbs’ a cabbie’s navigation skills and productizes them to enable anyone to navigate effectively, LLMs ‘absorb’ knowledge, potentially enabling ‘lower-skilled’ or ‘less-informed’ actors to perform tasks that were previously handled by ‘highly-skilled’ actors.

This is the second way AI attacks the skill premium.

#3 - Skill premium on managerial advantages #

Here’s a common trope in narratives about the impact of AI on work:

AI will always need humans in the loop.

Well, what do humans excel at?

A bunch of things, actually. Original thought, soulful (and not just creative) expression, relationships, and managerial goal-orientation, to name a few.

To actually get things done, you need one or more of the above. And technology is rarely good at substituting these. That’s why humans are needed in the loop.

Except that managerial goal-orientation may not be the sole domain of humans for long.

Enter AI agents.

AI Agents #

To define AI agents, I’ll borrow the definition proposed by one of the readers of this newsletter - Dharmesh Shah - at his new blog Agent.AI.

“Software that uses artificial intelligence to pursue a specified goal. It accomplishes this by decomposing the goal into actionable tasks, monitoring its progress, and engaging with digital resources and other agents as necessary.”

Agents are goal-seeking, and that’s what makes them different. While most technology aims at task substitution, agents go beyond tasks to seek goals.

Every agent operates with at least one **goal** (and possibly more than one).

In order to accomplish this goal, an agent needs to do three things:

SCAN: Scan and observe the current state of the environment

PLAN: Set up a plan by deconstructing the goal into constituent tasks, which collectively transform the environment towards achievement of the goal

ACT: Act out the plan leveraging other agents and digital resources, constantly monitoring its progress by restarting from step 1 to adjust the plan in response to its changing environment.
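The three steps above can be sketched as a small loop. This is a minimal toy, assuming an environment that is nothing more than a checklist of tasks. The names `scan`, `plan`, `act`, and `run_agent` are hypothetical and do not come from any agent framework.

```python
# Hypothetical toy environment: task name -> whether it is done.
Environment = dict[str, bool]


def scan(env: Environment) -> Environment:
    """SCAN: observe the current state (here, just a snapshot copy)."""
    return dict(env)


def plan(observed: Environment) -> list[str]:
    """PLAN: break the goal 'everything done' into the tasks still pending."""
    return [task for task, done in observed.items() if not done]


def act(env: Environment, task: str) -> None:
    """ACT: carry out one task; a real agent would call tools or other agents."""
    env[task] = True


def run_agent(env: Environment, max_steps: int = 10) -> list[str]:
    """Repeat SCAN -> PLAN -> ACT until the goal is met or steps run out."""
    log: list[str] = []
    for _ in range(max_steps):
        pending = plan(scan(env))
        if not pending:       # goal reached: nothing left to do
            break
        act(env, pending[0])  # act on one task, then go back to SCAN
        log.append(pending[0])
    return log


env = {"collect data": False, "draft report": False, "send report": False}
print(run_agent(env))
```

Re-scanning after every action is the part that matters here: if the environment changed between steps, the next plan would pick that up instead of following a stale task list.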

AI agents are in stark contrast to traditional deterministic software, where programmers do the SCAN and PLAN and then encode instructions into code for performing the ACT.

In summary, AI agents are cool. They get the job done.

And they look something like this:

An AI agent self-portrait

Or not.

Goal-seeking as the locus of rebundling tasks #

What we’re driving at is this:

Goal-seeking has thus far been a critical reason for keeping humans in the loop even when technology substitutes individual tasks.

A human is still involved in combining machine-performed tasks with the tasks they themselves perform (human-performed tasks) towards the performance of a goal.

AI agents are unique (unlike other technology) in that they can leverage goal-seeking behavior to rebundle tasks and replace human performance in the job entirely.

Here’s how this plays out:

First, an AI agent deconstructs a goal into its constituent tasks. It analyzes these tasks to understand their nature, complexity, and interdependencies and categorizes and sequences them towards contribution to the goal.

Once tasks are categorized and aligned with goals, the agent allocates resources (including calling on other agents) towards execution.

Agents can also continuously adapt and adjust task bundling strategies based on changing environmental information. They may re-evaluate priorities, re-sequence tasks, or change the other agents they are working with. In a perfectly competitive market of agents, an AI agent should have sufficient information to map the most appropriate agent to the task.
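A rough sketch of this decompose, sequence, and allocate flow follows. It assumes that task dependencies are known up front and that each candidate agent quotes a cost per task. The task names, agent names, and quotes are invented for illustration. The ordering uses `graphlib.TopologicalSorter` from the Python standard library.

```python
from graphlib import TopologicalSorter

# Hypothetical goal broken into tasks; each task maps to the tasks it depends on.
dependencies = {
    "research": set(),
    "draft": {"research"},
    "review": {"draft"},
    "publish": {"review"},
}

# Hypothetical pool of agents and the (made-up) cost each quotes per task.
quotes = {
    "research": {"agent_a": 5, "agent_b": 3},
    "draft": {"agent_a": 4, "agent_c": 6},
    "review": {"agent_b": 2, "agent_c": 2},
    "publish": {"agent_a": 1},
}


def sequence_tasks(deps: dict[str, set[str]]) -> list[str]:
    """Order tasks so that every task comes after the tasks it depends on."""
    return list(TopologicalSorter(deps).static_order())


def allocate(tasks: list[str], quotes: dict[str, dict[str, int]]) -> dict[str, str]:
    """Assign each task to the cheapest quoting agent (ties broken by name)."""
    return {
        task: min(quotes[task], key=lambda agent: (quotes[task][agent], agent))
        for task in tasks
    }


order = sequence_tasks(dependencies)
assignment = allocate(order, quotes)
print(order)
print(assignment)
```

Picking the cheapest quote is only one possible allocation rule. An agent re-evaluating priorities, as described above, would rerun both steps whenever the dependencies or the quotes change.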

Owing to the goal-seeking behavior explained above, AI agents re-bundle tasks towards specific goals, impacting how work is organized and executed. This restructuring occurs at both individual and organizational levels, ultimately reshaping job roles and team dynamics.

Goal-accomplishment is the real product of AI.

How AI agents erode the skill premium #

Agents may not eat your job, but they may eat your human-in-the-loop advantage.

In previous technological waves, humans often lost the skill premium associated with the task being augmented but they would retain the associated capabilities of environment scanning, planning, and resource allocation across human and technology resources.

Agents take away this managerial advantage of the human-in-the-loop who primarily performs environment scanning, planning, and resource allocation.

Yes, it’s early days yet for agents but as models develop and as more agents get created, a perfectly competitive market of agents could replace multiple levels of managerial advantages. Low-end managerial work gets replaced initially. But over time, complex managerial work may also struggle to command a premium.

Of course, in rapidly evolving environments, resource allocation requires original thought. And some (few) humans may effectively outsmart AI there. But for the vast majority, the skill premium of human-in-the-loop gets progressively eroded as all three effects laid out above play out.

Centralized market-making accelerates erosion #

Finally, as workers get more commoditized through AI, the work lends itself to a greater degree of centralized market-making.

Centralized market-making further accelerates the erosion of the skill premium.

To understand how this works out, let’s look at a market where this has played out at scale.

As I note in Slow-burn AI:

In the market of local transportation, the advent of Uber changed the mechanics of ‘job discovery’, or in this case the mechanics of finding your next ride. Cabbies could either be assigned jobs by the algorithm and compete with all other 5-star-rated, maps-augmented amateur drivers or they could stay out of the system and miss out on the demand coming in through Uber. Cabbies lost their negotiation power leading to a variety of effects - they had to accept rides without knowing the destination, they had to adhere to acceptance and cancellation rate metrics. They had lost not only the ability to set the price but also the agency to accept or reject work opportunities based on whether it made commercial sense.

Centralized market-making erodes the skill premium by pitting increasingly substitutable workers against each other and driving the clearing price of job allocation to a bare minimum.

Essentially, centralized markets combined with the three factors above that erode skill premium convert the job market into a commodities market seeking the lowest possible market-clearing price.
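To make the clearing-price mechanism concrete, here is a toy model. It assumes fully substitutable workers, a platform that fills jobs from the lowest asking wage upward, and a single price paid to everyone equal to the marginal worker's ask. The function name and all wage figures are hypothetical.

```python
def clearing_price(reservation_wages: list[float], jobs: int) -> float:
    """Price at which the `jobs` cheapest workers are just willing to work.

    Toy assumption: workers are fully substitutable, so the platform fills
    jobs from the lowest reservation wage upward and pays everyone the wage
    of the last (marginal) worker hired.
    """
    if jobs <= 0 or jobs > len(reservation_wages):
        raise ValueError("jobs must be between 1 and the number of workers")
    return sorted(reservation_wages)[jobs - 1]


# Hypothetical reservation wages (lowest hourly pay each worker will accept).
specialists = [50.0, 55.0, 60.0]
# Assumed effect of augmentation: lower-paid newcomers can now do the same task.
augmented_pool = specialists + [20.0, 25.0, 30.0]

print(clearing_price(specialists, jobs=3))     # only specialists compete
print(clearing_price(augmented_pool, jobs=3))  # everyone competes
```

With the same three jobs on offer, the price in this sketch falls from 60.0 to 30.0 once the newcomers enter the pool, and none of the original specialists is hired at a wage they would accept.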

The more technology commoditizes your skills, the faster your skill premium is eroded through centralized market-making.

This leads to a cycle of continuous commoditization:

Skill absorption into AI constantly drives greater commoditization.

An ever-improving AI model, trained by a larger base of users, drives continuous commoditization of the skill.

Successful augmentation expands the training data set not just through driving greater usage among workers but by fundamentally expanding the overall base of workers training the models. A feedback loop is set in motion here where greater productization and democratization of the knowledge starts accelerating commoditization.

A combination of these effects eventually erodes the skill premium associated with the job.

Read the full article here:

[Slow-burn AI: When augmentation, not automation, is the real threat](https://platforms.substack.com/p/slow-burn-ai-when-augmentation-not)

The commoditized human-in-the-loop #

Does AI eat your job?

It doesn’t really matter. That’s really the wrong question to be obsessing over.

What’s far more important is to determine whether AI gets to eat your skill premium.

Yes, there are human advantages (original thought, soulful expression, etc.) which, when combined with an effective use of AI, can help command a higher skill premium.

But for the vast majority of jobs, where humans-in-the-loop largely rely on (1) skill specialization, (2) learning advantages, and (3) managerial capabilities, AI will chip away at the skill premium that those jobs command.

Ultimately, the factor that really drives our engagement with our careers is the ability to own skills which grant us a skill premium.

Basic wage loss from AI-driven job displacement can be countered through policy (e.g. by setting up an AI tax on specific activities).

But the effects of AI on the skill premium are far more difficult to address.

And yet, we remain obsessed with the human-in-the-loop argument.
